Edge of the Universe is a modular interactive instrument exploring themes of time and space through live audio-visual performance. A collaboration between experience lab Odd Division and the band Little Dragon, the installation uses an array of Samsung Galaxy S6 Edge phones inside of a prototype flying saucer to take a journey to the Edge of the Universe and back.

Sampling past audio recorded by NASA in space and present audio recorded on Earth, the experience is part relic and part looking-glass. The audience is invited on an immersive and intimate journey with Little Dragon to the limits of the universe and back through this homage to space travel and the future of mobile synthesizers.

Edge of the Universe was performed by Little Dragon at the New Museum Sky Room in New York City.

As an installation, it was open to the public as part of the New Museum Incubator end-of-year showcase at Red Bull Studios in New York.

Left: Little Dragon performing Edge of the Universe at the New Museum Sky Room.

Top: Jeff Crouse, Mau Morgo and myself monitoring LEDs, graphics and sound during the performance.

The team presents the installation version at Red Bull Studios in Chelsea.

Process

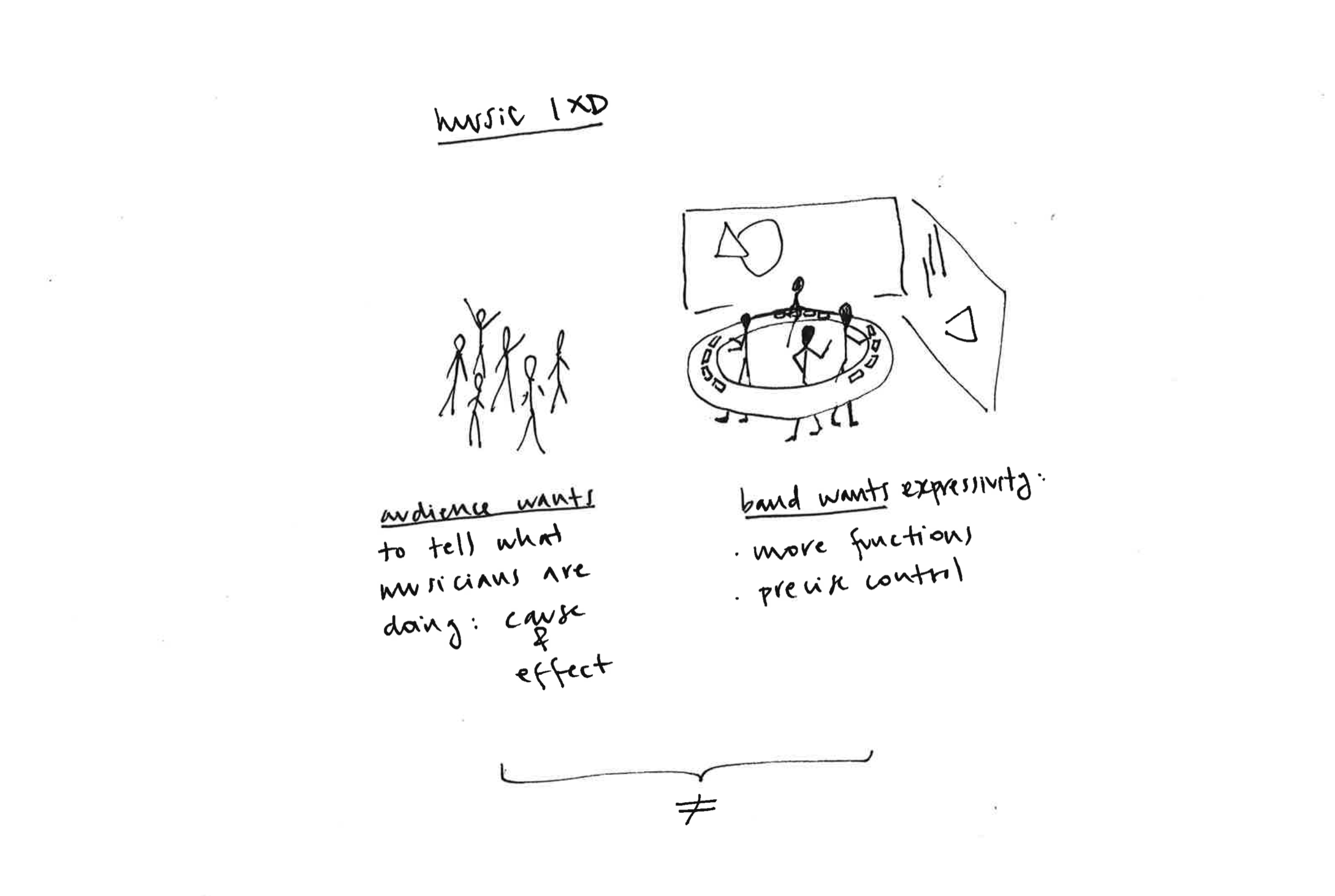

My role was leading the musical design and implementation of the instrument. I worked with the Odd Division team and Little Dragon on developing the concept and design of the installation, making sure that it would be a playable, expressive, and quickly learnable musical instrument.

Implementation included creating custom applications for Android (using Cordova, with graphics designed by Mau Morgo and implemented in WebGL by Lars Berg) and a custom MIDI Bridge application (built with Node.js). I also worked closely with the band on defining specific interfaces, notes and sounds for each of the five songs they performed at the New Museum show (custom apps + Ableton Live).

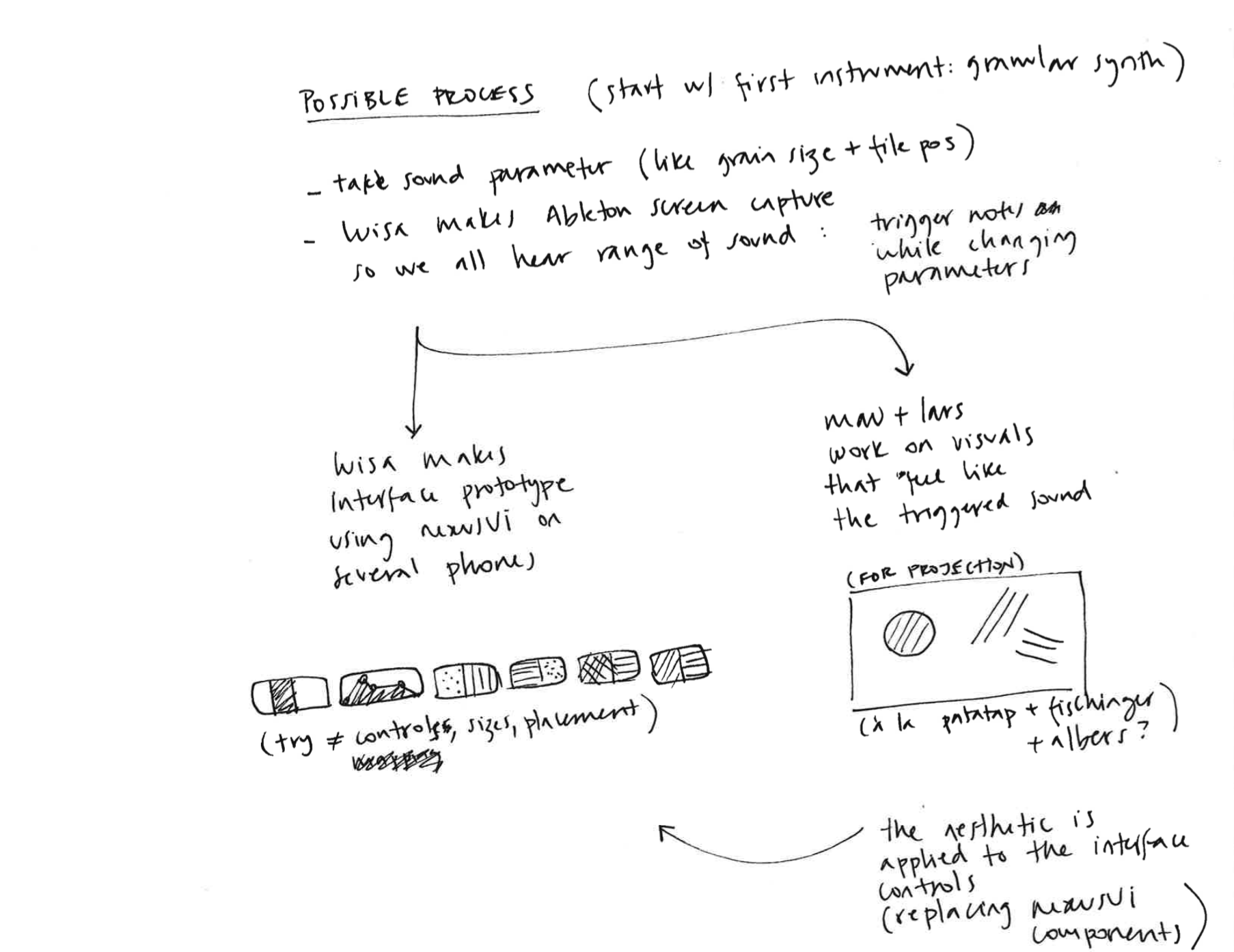

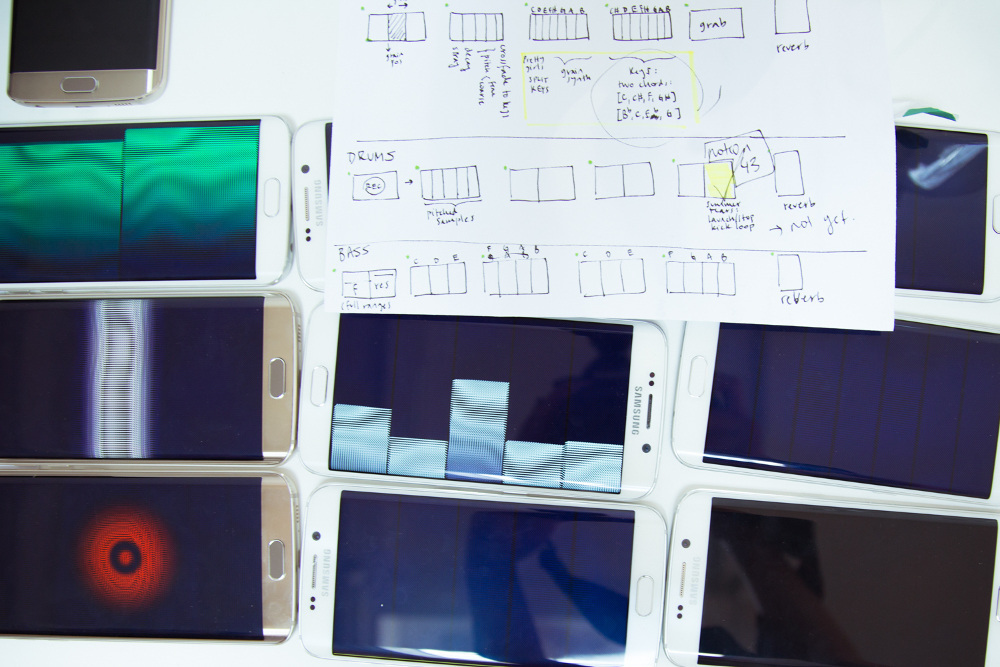

Sketches from the concept design phase

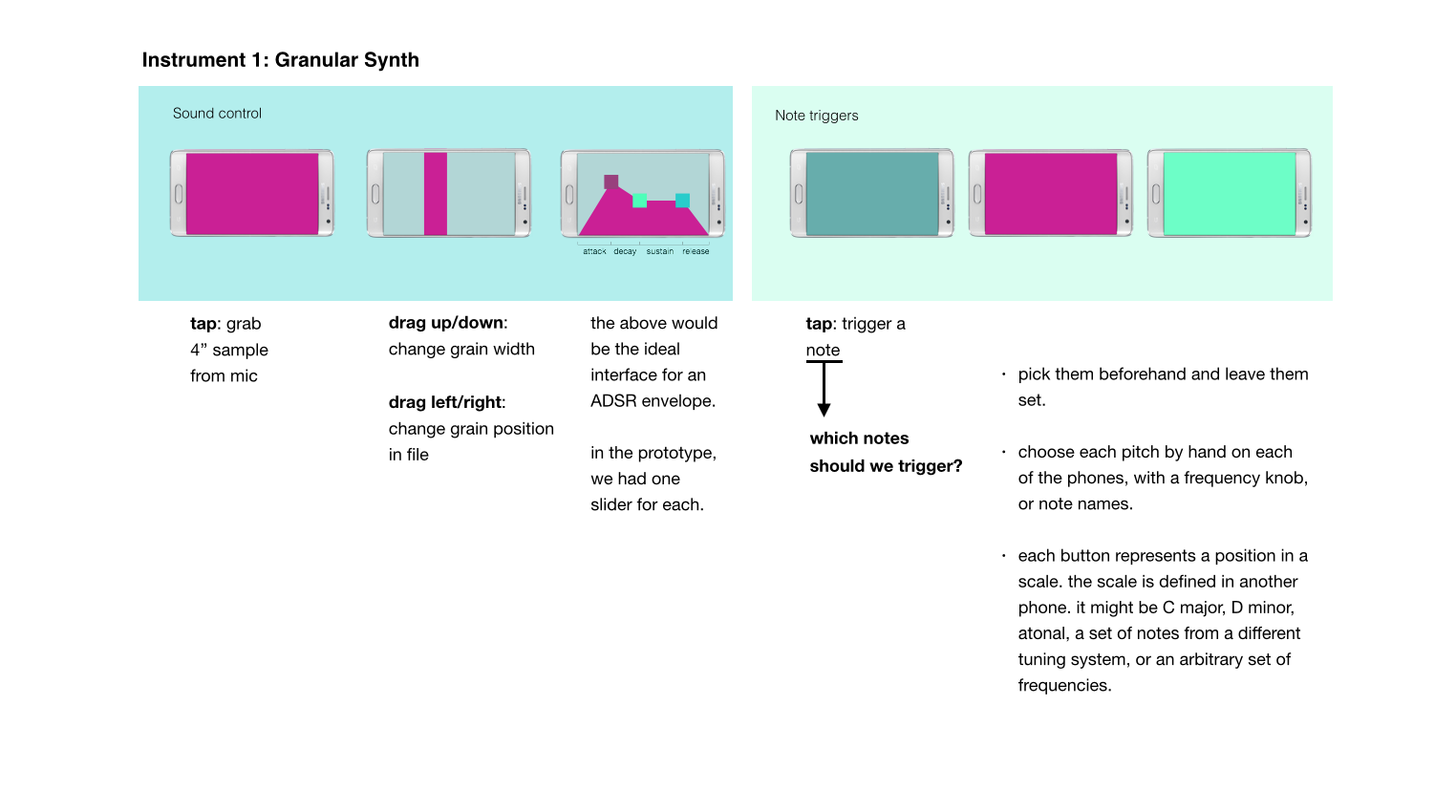

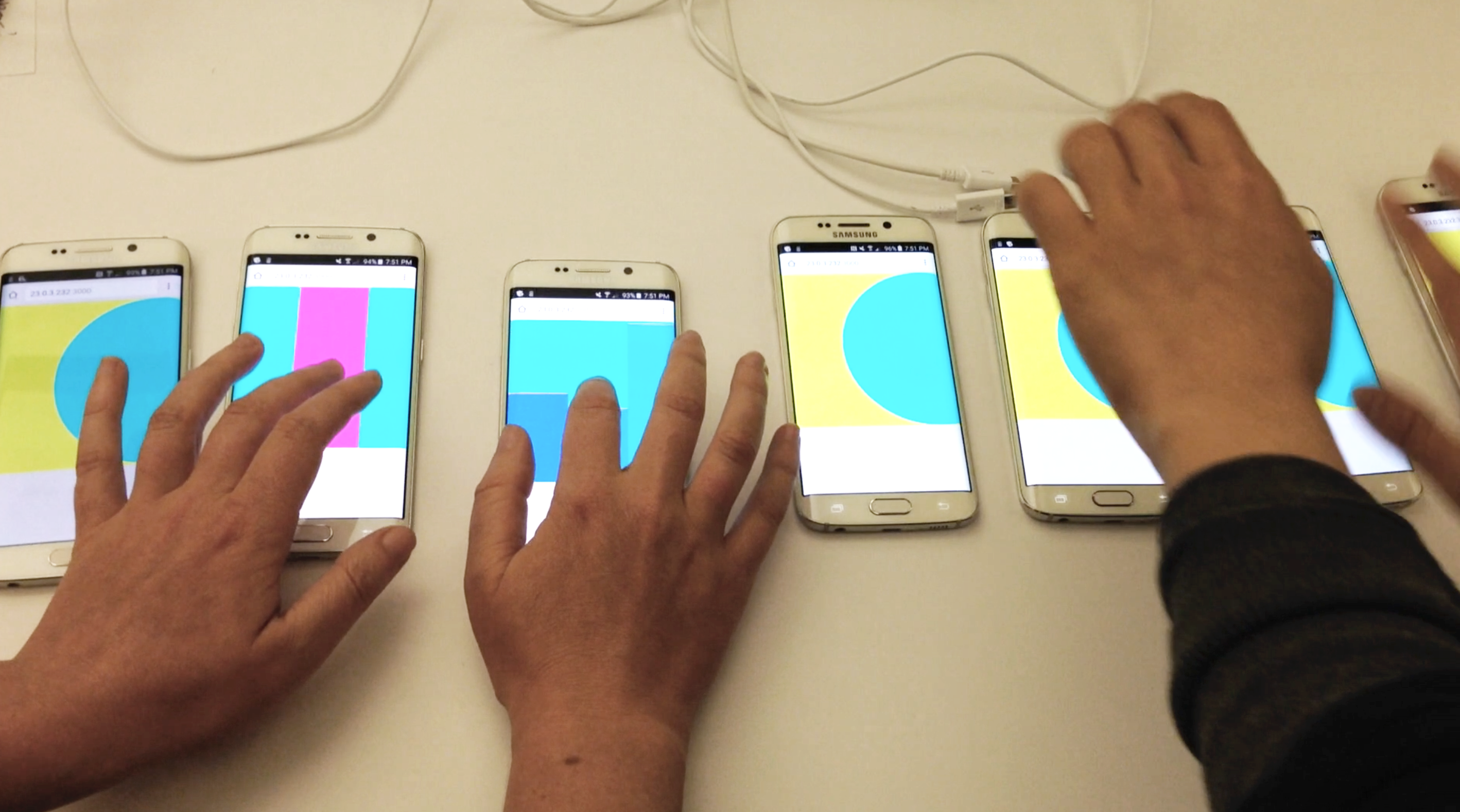

Top: Design and testing of the first prototype.

Right: Rehearsal mapping, with final visual treatment.

How it works

Edge of the Universe is made up of 4 custom software applications, 5 Ableton Live arrangements, and 1 LED system.

The LED system was built from 28 meters of NeoPixel LED strips and 4 Fadecandy controllers. The Fadecandy controllers made it fairly easy to create highly synchronized animations that had a life of their own, creating the effect of spaceship lights, while also responding to what the band was doing on the phones.
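To give a sense of what driving the strips involves: the Fadecandy server accepts Open Pixel Control (OPC) frames, each made of a 4-byte header (channel, command, 16-bit length) followed by one RGB triple per pixel. The show's LED software was written in Processing; the sketch below uses JavaScript purely for illustration. The pixel counts, the idle color, and the idea that each phone owns a contiguous run of pixels are all assumptions, not details from the actual build.

```javascript
// Build one Open Pixel Control "set pixel colors" frame.
// pixels is an array of [r, g, b] triples with values 0-255.
function opcFrame(pixels, channel = 0) {
  const frame = Buffer.alloc(4 + pixels.length * 3);
  frame[0] = channel;                          // channel 0 addresses every output
  frame[1] = 0;                                // command 0: set 8-bit pixel colors
  frame.writeUInt16BE(pixels.length * 3, 2);   // payload length in bytes
  pixels.forEach(([r, g, b], i) => {
    frame[4 + i * 3] = r;
    frame[5 + i * 3] = g;
    frame[6 + i * 3] = b;
  });
  return frame;
}

// Hypothetical layout: phone N owns pixels [N * pixelsPerPhone, (N + 1) * pixelsPerPhone).
// Every other pixel keeps a dim idle color.
function lightPhone(phoneIndex, color, options = {}) {
  const { pixelsPerPhone = 16, totalPixels = 128, idle = [0, 0, 8] } = options;
  const pixels = Array.from({ length: totalPixels }, () => idle);
  const start = phoneIndex * pixelsPerPhone;
  const end = Math.min(start + pixelsPerPhone, totalPixels);
  for (let i = start; i < end; i++) pixels[i] = color;
  return opcFrame(pixels);
}
```

A frame like this would then be written to a TCP connection to the Fadecandy server, which listens on port 7890 by default.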

Ableton Live is a powerful music production program that is particularly popular with live performers and, more importantly for us, extremely flexible when it comes to mapping controls from outside sources. We produced an Ableton Live project for each of the 5 songs that Little Dragon performed. Some of them allowed live sampling, granular synthesis, and real-time control of effects and other parameters, in addition to, of course, triggering sounds such as drums, keys, and bass.

The Software:

- A custom Android app was developed using Cordova, a platform for building native mobile applications using web tools. This application includes 18 distinct WebGL interfaces built on top of NexusUI instruments (a JavaScript library of audio interface components): 6 for bass, 6 for keys, and 6 for drums. The instruments range from traditional keyboards to toggle buttons to tilt-sensitive inputs. When the band uses these interfaces (a key is pressed, a drum is tapped, a phone is tilted), they broadcast messages over OSC (Open Sound Control, a lightweight and very fast network protocol), and those messages drive the entire show. All of the following applications listen for these messages and interpret them in different ways.

- The MIDI Bridge is a small Node.js program that listens for the OSC messages coming from the phones and translates them into MIDI messages that are sent directly to the painstakingly mapped Ableton Live projects to trigger notes and effects. This intermediate layer between the phones and Ableton Live made it easier for us to keep the interfaces general purpose while customizing exactly how they worked behind the scenes from song to song.

- The LED control software is written in Processing and listens for the OSC messages in order to light up the LEDs in front of the phones from which they come. Additionally, it has several controls that allow an operator to tweak and manipulate other LED animations that occur during the show.

- The projection visuals are the final custom application: a WebGL front end with a Node.js/Express/Socket.IO back end. The back end listens for the OSC messages and forwards them to the WebGL graphics to provide a real-time representation of what the band is doing. Using a web server approach allowed us to easily run the projection on a couple of computers to power the 3 projectors at the show. Additionally, we connected a physical MIDI controller with lots of knobs and sliders to the application so that an operator/VJ could control aspects of the visualization live.
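The translation step performed by the MIDI Bridge can be sketched roughly as follows. This is not the code used in the show: the OSC address layout (`/<section>/note` with a note and a velocity), the song name, and the channel and transposition values are all hypothetical, and only integer and float arguments are decoded. It illustrates how a per-song table lets the phone interfaces stay generic while the resulting MIDI changes from song to song.

```javascript
// Read a null-terminated OSC string; return it with the next 4-byte-aligned offset.
function readOscString(buf, offset) {
  const end = buf.indexOf(0, offset);
  return { value: buf.toString('ascii', offset, end), next: (end + 4) & ~3 };
}

// Decode one OSC message whose arguments are int32 ('i') or float32 ('f').
function decodeOsc(buf) {
  const address = readOscString(buf, 0);
  const tags = readOscString(buf, address.next);
  let offset = tags.next;
  const args = [];
  for (const tag of tags.value.slice(1)) {     // skip the leading ','
    if (tag === 'i') args.push(buf.readInt32BE(offset));
    else if (tag === 'f') args.push(buf.readFloatBE(offset));
    else throw new Error(`unsupported OSC type tag: ${tag}`);
    offset += 4;
  }
  return { address: address.value, args };
}

// Hypothetical per-song table: MIDI channel and transposition for each section.
const SONG_MAPS = {
  songA: {
    bass: { channel: 0, transpose: -12 },
    keys: { channel: 1, transpose: 0 },
    drums: { channel: 9, transpose: 0 },
  },
};

// Turn a decoded message into [status, note, velocity], or null if it is unmapped.
function oscToMidi(message, songMap) {
  const [, section, kind] = message.address.split('/');
  const mapping = songMap[section];
  if (!mapping || kind !== 'note') return null;
  const [note, velocity] = message.args;
  const clamp = (v) => Math.max(0, Math.min(127, Math.round(v)));
  const status = (velocity > 0 ? 0x90 : 0x80) | mapping.channel; // note on / note off
  return [status, clamp(note + mapping.transpose), clamp(velocity)];
}

// Example: "/bass/note" with args (48, 100), encoded by hand.
const examplePacket = Buffer.concat([
  Buffer.from('/bass/note\0\0', 'ascii'),
  Buffer.from(',ii\0', 'ascii'),
  Buffer.from([0, 0, 0, 48, 0, 0, 0, 100]),
]);
console.log(oscToMidi(decodeOsc(examplePacket), SONG_MAPS.songA)); // [ 144, 36, 100 ]
```

Delivering the resulting bytes to a MIDI port that Ableton Live listens on is left out here, since that part depends on the MIDI library and operating system in use.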

As Little Dragon touched, tilted, and swiped the phones, hundreds of tiny bits of information were broadcast out into our local network with no particular destination in mind and without even a guarantee that they would arrive anywhere, open to be heard and interpreted by any of the musical or visual applications that were listening. This is in contrast to the way these types of projects are usually set up, in which information has a definite destination. In this case, the actions of the band were being used in so many different ways that their instruments were essentially just shouting into space.
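This "shouting into space" pattern can be sketched with a UDP broadcast, assuming the OSC messages travel as UDP datagrams (which fits the description of messages with no set destination and no delivery guarantee). The address `/keys/note`, port 9000, and the broadcast address below are placeholders rather than values from the show, and the phones themselves ran a Cordova app rather than Node.js.

```javascript
const dgram = require('dgram');

// Encode a string the OSC way: null-terminated, padded to a multiple of 4 bytes.
function oscString(s) {
  const buf = Buffer.alloc((Buffer.byteLength(s, 'ascii') + 4) & ~3);
  buf.write(s, 0, 'ascii');
  return buf;
}

// Encode an OSC message whose arguments are all int32 values.
function encodeOsc(address, ints) {
  const args = Buffer.alloc(4 * ints.length);
  ints.forEach((value, i) => args.writeInt32BE(value, 4 * i));
  return Buffer.concat([
    oscString(address),
    oscString(',' + 'i'.repeat(ints.length)),  // type tag string, e.g. ",ii"
    args,
  ]);
}

// Send one message to every listener on the local network. Nothing is
// acknowledged: if no application is listening, the datagram is simply lost.
function broadcastOsc(address, ints, port = 9000, host = '255.255.255.255') {
  const socket = dgram.createSocket('udp4');
  socket.on('error', () => socket.close());    // a failed send is ignored, not retried
  socket.bind(0, () => {
    socket.setBroadcast(true);                 // must be enabled after binding
    socket.send(encodeOsc(address, ints), port, host, () => socket.close());
  });
}
```

Calling `broadcastOsc('/keys/note', [60, 100])` would send a single note message to whichever applications happen to be bound to that port, so adding another listener (for LEDs, MIDI, or visuals) requires no change on the sending side.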

Credits

Odd Division:

- Aramique - Partner/Director

- Jeff Crouse - Partner/Director

- Mau Morgo - Partner/Director

- Luisa Pereira - Interaction design and development

- Lars Berg - WebGL design and development

- Ranjit Bhatnagar - electronics whisperer

- 11th Street Workshop - fabrication partner

Commissioned by Protein on behalf of Samsung Mobile